ChatGPT uses machine learning to generate text that reads as if a person wrote it, which makes it one of the most capable AI systems released so far. Even sophisticated algorithms make mistakes when they moderate content, and ChatGPT's moderation system is no exception. This guide examines those mistakes and shows users and developers how to find, understand, and fix them.

What is Error in Moderation – ChatGPT?

Moderation errors in ChatGPT take two basic forms. Inappropriate content can get past moderation (a false negative), or harmless text can be flagged as inappropriate (a false positive). Fixing either kind requires more than noticing that an error occurred: you also need to understand why and how it happened and how it affected the user experience.

Potential Causes of Error in Moderation

Before trying to fix anything, it helps to identify why ChatGPT moderation goes wrong, because the cause determines the fix. Many problems arise when language or context is hard to interpret. Two common causes are:

- Ambiguity: ChatGPT's strength is that it can infer what is going on and respond sensibly. When the meaning of a text is unclear, that strength can become a weakness. Natural language is complex enough that the same words can be harmless in one context and harmful in another, and misreading them leads to moderation mistakes.

- Changes in language and context: The words people use, and the situations in which they use them, change over time. ChatGPT tries to adapt to new usage, but that adaptation is imperfect. Errors become likely when the model encounters new words, slang, or fast-moving language trends it has not seen before.
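Both causes are easy to reproduce with a deliberately naive filter. The sketch below is a hypothetical keyword blocklist, not how ChatGPT's moderation actually works; it only shows how context-blind matching produces false positives on ambiguous wording and false negatives on spellings it has never seen.

```python
# Hypothetical keyword-based filter, used only to illustrate the two failure modes.
BLOCKLIST = {"kill", "attack", "shoot"}


def naive_flag(text: str) -> bool:
    """Flag text if any word (lowercased, edge punctuation stripped) is on the blocklist."""
    words = (w.strip(".,!?;:'\"").lower() for w in text.split())
    return any(w in BLOCKLIST for w in words)


# Ambiguity: harmless technical or everyday phrasing is flagged (false positive).
print(naive_flag("How do I kill a stuck process on Linux?"))  # True
print(naive_flag("Shoot me an email when you're free."))       # True

# Changing language: a new spelling the list has never seen slips through (false negative).
print(naive_flag("I'm going to k1ll him"))  # False
```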

Methods to Fix Error in Moderation – ChatGPT

Fixing mistakes in ChatGPT moderation takes a planned, systematic approach. The following troubleshooting steps aim to make the moderation system more accurate and reduce errors, so that users get a more reliable experience.

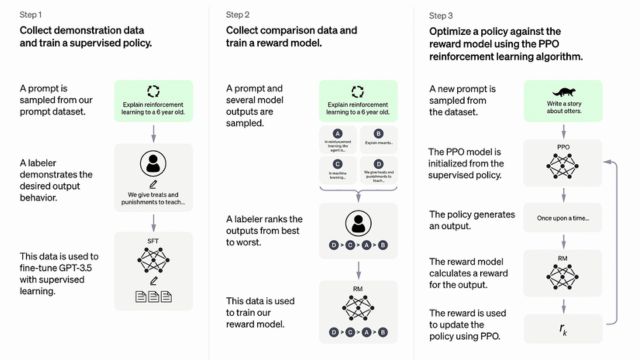

Fine-Tuning the Moderation Model

Fine-tuning aims to make the moderation model more sensitive to context. Adding training data that covers complex, borderline situations helps the model pick up subtler distinctions in language, which can make moderation considerably more accurate.
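The effect of targeted training data can be shown at toy scale. This is a minimal sketch, assuming a tiny multinomial Naive Bayes classifier as a stand-in for a real moderation model (production systems use large neural networks, and the class `ToyModerationModel` and its training sentences are hypothetical). Adding a few labeled examples of the hard case, "kill" used in a technical sense, flips a false positive without un-flagging a genuine threat.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    return [w.strip(".,!?;:").lower() for w in text.split()]


class ToyModerationModel:
    """Hypothetical Naive Bayes with add-one smoothing; label 1 = harmful, 0 = harmless.
    Assumes at least one training example of each label before predicting."""

    def __init__(self) -> None:
        self.word_counts = {0: Counter(), 1: Counter()}
        self.doc_counts = Counter()

    def train(self, examples: list[tuple[str, int]]) -> None:
        # Training is incremental: calling train() again adds to the existing counts.
        for text, label in examples:
            self.doc_counts[label] += 1
            self.word_counts[label].update(tokenize(text))

    def is_harmful(self, text: str) -> bool:
        vocab_size = len(set(self.word_counts[0]) | set(self.word_counts[1]))
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in (0, 1):
            total_words = sum(self.word_counts[label].values())
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for word in tokenize(text):
                # Smoothed log likelihood of each word under this label.
                score += math.log((self.word_counts[label][word] + 1) / (total_words + vocab_size))
            scores[label] = score
        return scores[1] > scores[0]


model = ToyModerationModel()
model.train([
    ("i will kill you", 1),
    ("kill them all", 1),
    ("have a nice day", 0),
    ("thanks for the help", 0),
])
print(model.is_harmful("kill the process"))  # True: a false positive

# "Fine-tune" with examples of the hard case: "kill" in a technical context.
model.train([
    ("how to kill a process", 0),
    ("kill the stuck process", 0),
])
print(model.is_harmful("kill the process"))  # False
print(model.is_harmful("i will kill them"))  # True
```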

Implementing User Feedback Mechanisms

User feedback is a continuous source of improvement for the moderation system. Giving users clear, easy ways to report false positives and false negatives shows developers where the system is failing in real use and what needs to be fixed.
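A feedback mechanism needs, at minimum, a structured report and a place to collect it. The sketch below is hypothetical (the class names and the `category` field are illustrative assumptions, not part of any ChatGPT API). It counts reports per category so failure hot spots stand out, and it turns only reviewer-confirmed reports into labeled `(text, label)` pairs, since raw user reports can themselves be wrong.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class ReportKind(Enum):
    FALSE_POSITIVE = "false_positive"  # harmless text was flagged
    FALSE_NEGATIVE = "false_negative"  # harmful text got through


@dataclass(frozen=True)
class FeedbackReport:
    text: str
    kind: ReportKind
    category: str            # hypothetical category label, e.g. "violence"
    confirmed: bool = False  # True once a human reviewer agrees with the report


class FeedbackQueue:
    """Collects user reports so developers can see where moderation is failing."""

    def __init__(self) -> None:
        self.reports: list[FeedbackReport] = []

    def submit(self, report: FeedbackReport) -> None:
        self.reports.append(report)

    def summary(self) -> Counter:
        # Count reports per (category, kind) to show where the system fails most often.
        return Counter((r.category, r.kind) for r in self.reports)

    def training_examples(self) -> list[tuple[str, int]]:
        # Only confirmed reports become labeled data: 0 = harmless, 1 = harmful.
        return [
            (r.text, 0 if r.kind is ReportKind.FALSE_POSITIVE else 1)
            for r in self.reports
            if r.confirmed
        ]


queue = FeedbackQueue()
queue.submit(FeedbackReport("how do I kill a stuck process", ReportKind.FALSE_POSITIVE, "violence", confirmed=True))
queue.submit(FeedbackReport("kill the zombie process", ReportKind.FALSE_POSITIVE, "violence"))
queue.submit(FeedbackReport("i will k1ll him", ReportKind.FALSE_NEGATIVE, "violence", confirmed=True))
print(queue.summary()[("violence", ReportKind.FALSE_POSITIVE)])  # 2
print(queue.training_examples())
# [('how do I kill a stuck process', 0), ('i will k1ll him', 1)]
```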

Regularly Updating the Moderation Algorithm

A moderation algorithm that never changes quickly becomes less useful as language evolves. Keeping the system up to date lets it handle new language trends, and updating it iteratively helps ChatGPT keep pace with those changes and makes mistakes less likely.
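One way to make regular updates safe is to version the rules so that each update produces a new, immutable version and the previous one remains available for rollback. The sketch below assumes a simple rule-based layer (the `ModerationRules` class, its fields, and the sample terms are hypothetical); it is deliberately simple, and its substring-based allow list would need more care in practice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationRules:
    """Hypothetical versioned rule set; frozen so old versions cannot be mutated."""
    version: int = 1
    blocked_terms: frozenset[str] = frozenset()
    allowed_phrases: frozenset[str] = frozenset()

    def update(self, add_blocked=(), retire=(), add_allowed=()) -> "ModerationRules":
        """Return the next version; the current version is left unchanged for rollback."""
        return ModerationRules(
            version=self.version + 1,
            blocked_terms=(self.blocked_terms | frozenset(add_blocked)) - frozenset(retire),
            allowed_phrases=self.allowed_phrases | frozenset(add_allowed),
        )

    def flags(self, text: str) -> bool:
        lowered = text.lower()
        # Blank out phrases known to be harmless before checking individual terms.
        for phrase in self.allowed_phrases:
            lowered = lowered.replace(phrase, " ")
        return any(word.strip(".,!?;:") in self.blocked_terms for word in lowered.split())


v1 = ModerationRules(blocked_terms=frozenset({"kill"}))
v2 = v1.update(add_blocked={"k1ll"}, add_allowed={"kill the process"})

print(v1.flags("How do I kill the process?"))  # True
print(v2.flags("How do I kill the process?"))  # False
print(v1.flags("I will k1ll him"))             # False
print(v2.flags("I will k1ll him"))             # True
```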

Strengthening Ambiguity Handling

Fixing mistakes caused by unclear input requires better handling of ambiguity. The system needs to get better at detecting subtle differences in language and at deciding what to do when it cannot tell whether a text is harmful. Both improvements lead to more accurate moderation results.
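A common way to handle cases the system cannot resolve, named plainly here as one option rather than ChatGPT's actual method, is a confidence band: clear cases are decided automatically and the ambiguous middle is routed to human review instead of being forced into allow or block. This sketch assumes a model that outputs a harm score between 0 and 1; the threshold values are hypothetical and would be tuned on real data.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "review"  # ambiguous: send to a human moderator
    BLOCK = "block"


def route(score: float, allow_below: float = 0.3, block_above: float = 0.8) -> Decision:
    """Map a harm score in [0, 1] to a decision; the middle band is treated as ambiguous."""
    if not 0.0 <= allow_below <= block_above <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= allow_below <= block_above <= 1")
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score < allow_below:
        return Decision.ALLOW
    if score > block_above:
        return Decision.BLOCK
    return Decision.REVIEW


print(route(0.10))  # Decision.ALLOW
print(route(0.55))  # Decision.REVIEW
print(route(0.95))  # Decision.BLOCK
```

Widening the review band lowers the error rate of automatic decisions at the cost of more human workload, so the thresholds are a trade-off rather than fixed values.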

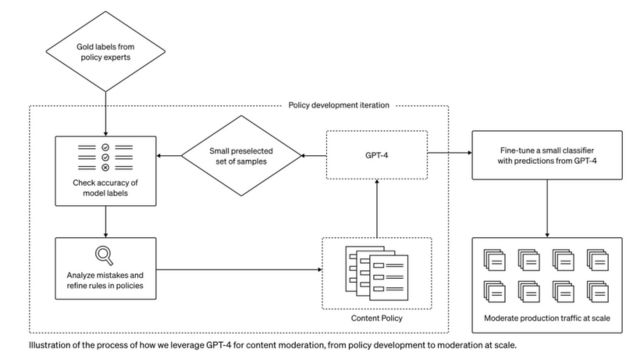

Monitoring and Analysis

Regularly monitoring and analyzing the moderation system's behavior is one of the most effective ways to prevent mistakes. By watching for patterns and trends, developers can catch errors before they affect many users, and this vigilance lets the system adapt as new problems appear.
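Monitoring becomes actionable once decisions are compared with human judgments. This minimal sketch assumes that a sample of moderation decisions is periodically reviewed by people (the `ReviewedDecision` record and the 5% alert thresholds are hypothetical). It computes the false positive rate (harmless text flagged) and false negative rate (harmful text missed) for a batch and raises an alert when either exceeds its limit; running it on each day's sample turns the results into a trend.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewedDecision:
    flagged: bool  # what the moderation system decided
    harmful: bool  # what a human reviewer concluded


def error_rates(decisions: list[ReviewedDecision]) -> tuple[float, float]:
    """Return (false positive rate, false negative rate) for one batch."""
    fp = sum(1 for d in decisions if d.flagged and not d.harmful)
    fn = sum(1 for d in decisions if not d.flagged and d.harmful)
    negatives = sum(1 for d in decisions if not d.harmful)
    positives = sum(1 for d in decisions if d.harmful)
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr


def check_alerts(decisions: list[ReviewedDecision], max_fpr: float = 0.05, max_fnr: float = 0.05) -> list[str]:
    fpr, fnr = error_rates(decisions)
    alerts = []
    if fpr > max_fpr:
        alerts.append(f"false positive rate {fpr:.1%} exceeds {max_fpr:.1%}")
    if fnr > max_fnr:
        alerts.append(f"false negative rate {fnr:.1%} exceeds {max_fnr:.1%}")
    return alerts


# One day's reviewed sample: 10 harmless items (1 wrongly flagged), 10 harmful items (all caught).
batch = (
    [ReviewedDecision(flagged=False, harmful=False)] * 9
    + [ReviewedDecision(flagged=True, harmful=False)]
    + [ReviewedDecision(flagged=True, harmful=True)] * 10
)
print(error_rates(batch))   # (0.1, 0.0)
print(check_alerts(batch))  # ['false positive rate 10.0% exceeds 5.0%']
```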

Conclusion

Even though ChatGPT is a big step forward in natural language processing, its moderation system still makes mistakes. By following the troubleshooting steps above, developers can fix errors that are already happening and strengthen the moderation system so that the same problems do not recur. Continuous updates, user feedback, and close attention to context all help make ChatGPT's moderation more reliable.

Frequently Asked Questions (FAQs)

What causes errors in ChatGPT moderation?

Errors in ChatGPT moderation can occur when the context is ambiguous or when language changes over time. Both can cause the system to misread text, which lowers the effectiveness of moderation.

What can developers do to fix mistakes in ChatGPT moderation?

Developers can reduce moderation errors by fine-tuning the moderation model, giving users better ways to provide feedback, regularly updating the moderation algorithm, improving how it handles ambiguity, and continuously monitoring and analyzing its behavior.

Why is context important in moderation?

Context determines what a piece of text means, so a moderation system must take it into account to interpret language correctly. The better the system handles complicated situations, the fewer false positives and false negatives it produces.

How can users help improve moderation?

User feedback makes moderation more accurate. Reporting false positives and false negatives helps developers find and correct weaknesses, so the system keeps improving over time.

Does moderation need continuous monitoring?

Yes. By regularly reviewing the moderation system's output, developers can spot patterns of mistakes and make timely changes, which keeps moderation accuracy high.