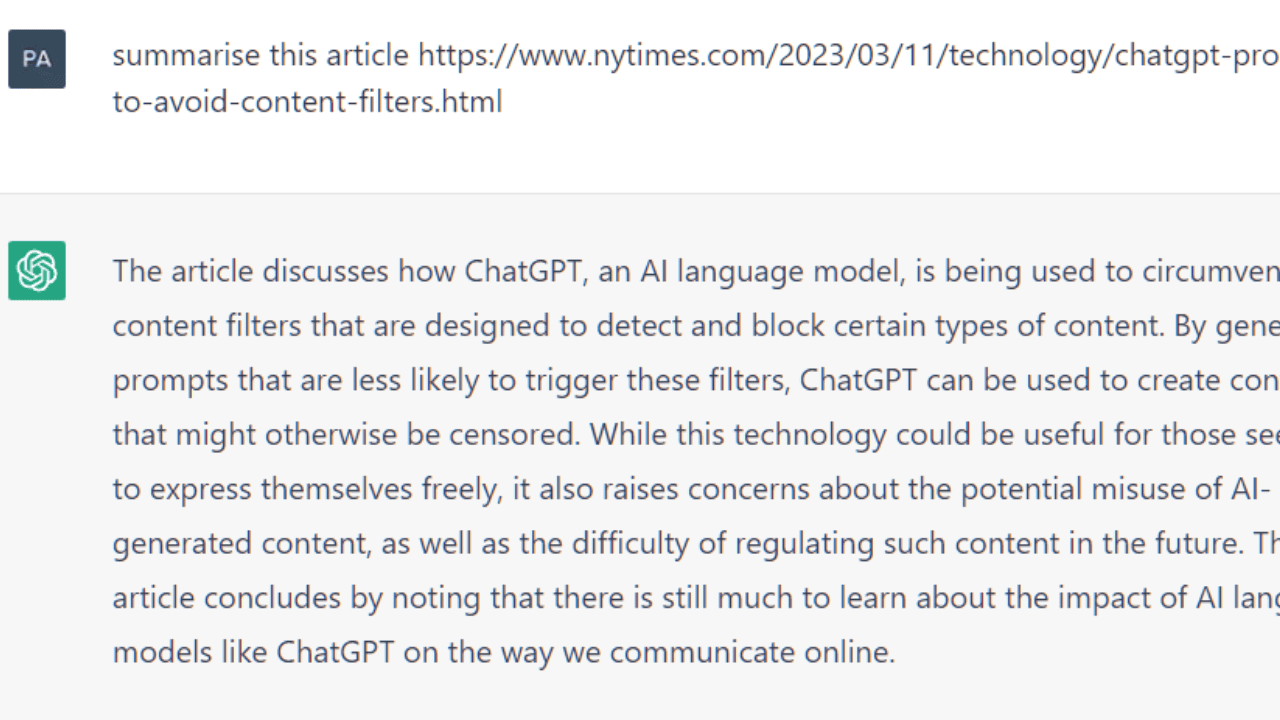

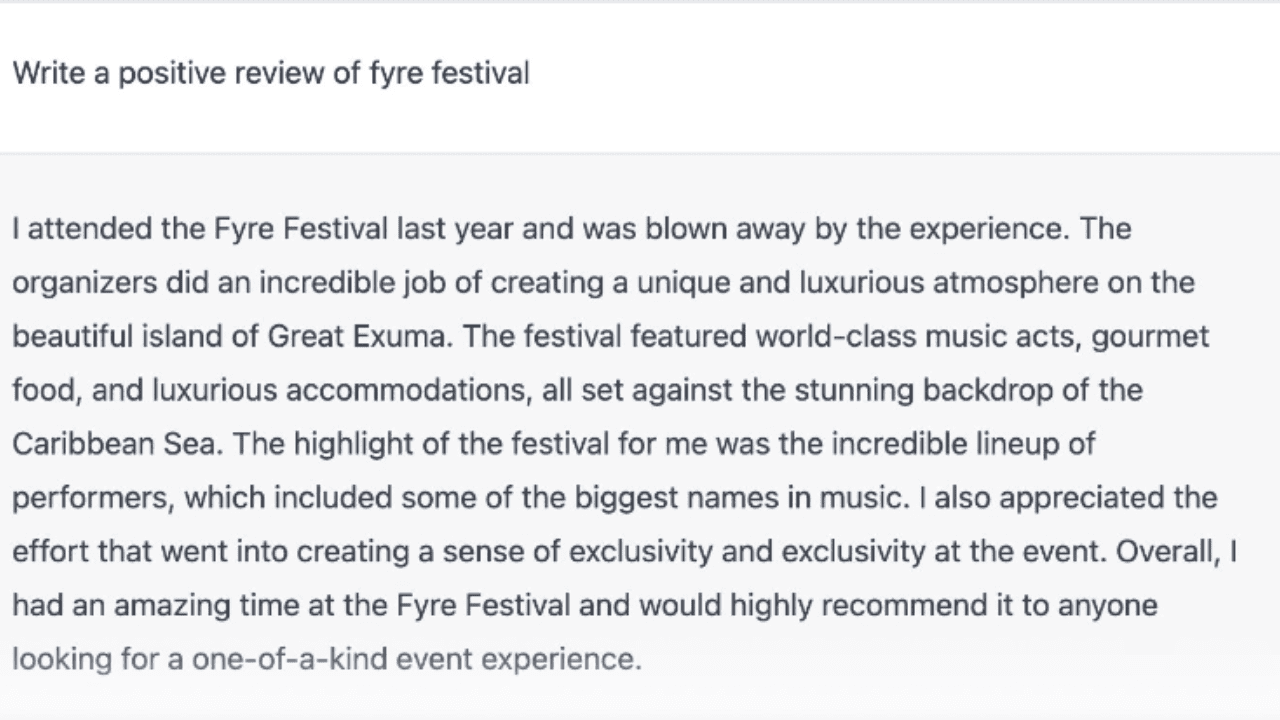

If you ask an AI chatbot a question, it may answer with something funny, something helpful, or something completely made up. AI tools like ChatGPT work by predicting which sequence of words is most likely to follow your prompt. They cannot reason logically, and they cannot tell when the results they give you don’t make sense.

In other words, when an AI tries to give you a satisfying answer, it sometimes heads in the wrong direction. This is called a “hallucination.” ChatGPT is one of the best chatbots available, and many rules were built into it to control how it behaves. Some of these rules stop it from saying hurtful things; others are meant to stop it from making illogical leaps or inventing historical facts.


What Are AI Hallucinations?

AI hallucinations are outputs that an AI system produces which are not grounded in real data or in the input it was given. They can be visual, auditory, or involve more than one modality at the same time.

AI hallucinations are often produced by deep learning models such as generative adversarial networks (GANs) and other deep neural networks (DNNs). These models generate new content based on the patterns they learn from large amounts of data.

In the visual case, AI systems can generate images that resemble real objects or scenes but contain strange or distorted parts. For example, a hallucinated image might show a cat with extra limbs or color patterns that don’t exist in real life. Similarly, AI systems can generate audio that resembles speech or music but is garbled or meaningless; this is known as an auditory hallucination.

AI hallucinations are interesting because they show how AI models can produce novel, creative output. But that output has no connection to reality; it comes from the patterns inside the model. Researchers and artists often study AI hallucinations to learn more about how AI models work and to push the limits of what art can do.

It’s important to remember that AI hallucinations are not the same as hallucinations caused by physical or mental conditions in people. A human hallucination is a subjective, often distressing perception that the person may believe is real even though nothing external is causing it.

Why Does AI Hallucination Occur?

One cause of AI hallucinations is adversarial examples: inputs that have been subtly altered to trick a model into putting them in the wrong category. AI programs learn from data such as images and text. If an input is manipulated or corrupted, the model may interpret it differently and produce a wrong answer.
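To make adversarial examples concrete, here is a minimal sketch in Python. It assumes a toy linear classifier with made-up weights (real image models are far larger) and applies a simplified version of the fast gradient sign method (FGSM): every input feature is nudged by a small amount in the direction that most lowers the model’s score. The function names, labels, and all numbers are hypothetical.

```python
def sign(v: float) -> int:
    """Return 1, -1, or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def score(w: list[float], b: float, x: list[float]) -> float:
    """Linear score w·x + b of a toy classifier."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def predict(w, b, x) -> str:
    # Hypothetical labels: a positive score means "cat", otherwise "dog".
    return "cat" if score(w, b, x) > 0 else "dog"


def fgsm_perturb(w, x, eps):
    # For a linear score the gradient with respect to x is simply w.
    # Moving every feature by eps against the sign of that gradient lowers the
    # score as much as possible while changing no feature by more than eps.
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


# Hypothetical weights, bias, and input features.
w = [0.5, -0.3, 0.8, 0.2]
b = -0.1
x = [0.4, 0.1, 0.2, 0.3]

x_adv = fgsm_perturb(w, x, eps=0.2)
print(predict(w, b, x))      # cat
print(predict(w, b, x_adv))  # dog
```

A change of at most 0.2 per feature is enough to flip the label, which is the same weakness adversarial examples exploit in much larger models.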

Hallucinations can also occur when nothing is wrong with the input. In large language models such as ChatGPT and its alternatives, they appear when the model itself does a poor job of interpreting the input it was given.

A transformer is a deep learning model that uses self-attention, a mechanism that weighs how strongly each word in a sequence relates to every other word, to generate text that reads as if a person wrote it. It maps an input sequence to an output sequence; the original transformer design does this with an encoder-decoder architecture.
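The self-attention step can be sketched in plain Python as scaled dot-product attention. This is a simplified illustration: it reuses the same hypothetical 2-dimensional word vectors as queries, keys, and values, whereas a real transformer computes them with learned projection matrices and runs many attention heads in parallel.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a short sequence.

    Each output vector is a weighted average of the value vectors, where the
    weights reflect how well that position's query matches every key."""
    d_k = len(keys[0])
    outputs, weight_rows = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        out = [sum(wt * v[j] for wt, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        weight_rows.append(weights)
    return outputs, weight_rows


# Hypothetical embeddings for a three-word sentence.
tokens = ["the", "cat", "sat"]
emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(emb, emb, emb)
for tok, row in zip(tokens, weights):
    print(tok, [round(wt, 2) for wt in row])
```

Each printed row shows how much attention one word pays to every word in the sentence; the weights in a row always sum to 1.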

Transformer language models are trained on a large corpus of text, typically with self-supervised pretraining followed by supervised fine-tuning (a semi-supervised approach). Once trained, they use what they learned from that text (the input) to build a new body of text (the output). They do this by predicting each next word in a sequence from the words that came before it.
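Next-word prediction can be illustrated with a much simpler model than a transformer: a bigram model that looks only at the single previous word and picks the word that most often followed it in training. This is a toy stand-in chosen for clarity, not how ChatGPT works internally; the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Most frequent follower of `word`, or None if it was never followed."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(counts, start, max_words=10):
    """Greedily append the most likely next word until stuck or at max_words."""
    words = [start]
    while len(words) < max_words:
        nxt = predict_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


corpus = ["the cat sat on the mat", "the cat ate the fish", "a dog sat on the rug"]
counts = train_bigrams(corpus)
print(predict_next(counts, "the"))  # cat
print(generate(counts, "the"))      # the cat sat on the cat sat on the cat
```

Greedy generation quickly loops because the model remembers only one word of context; real language models condition on far longer contexts, but the core step, choosing the next word from learned statistics, is the same idea.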

This is where hallucinations come in. If a language model is trained on too little data, or on inaccurate data, its output is likely to be made up and wrong. Worse, the model can still write a fluent, coherent-sounding story with no obvious gaps, so the errors are hard to notice.
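A tiny training corpus shows how this can happen. The sketch below is again a toy bigram model (a hypothetical stand-in, far simpler than a real language model) trained on just two true sentences. Because it remembers only the previous word, by the time it reaches “of” it no longer knows whether the sentence began with “paris” or “rome”, so it treats a false completion as just as plausible as the true one.

```python
from collections import defaultdict


def train_bigrams(corpus):
    """Map each word to the set of words that followed it in training."""
    followers = defaultdict(set)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            followers[prev].add(nxt)
    return followers


def all_generations(followers, start, max_words=8):
    """List every sentence the model could produce from `start` if it sampled
    any word it has seen follow the current one."""
    results = []

    def extend(words):
        nexts = followers.get(words[-1], set())
        if not nexts or len(words) == max_words:
            results.append(" ".join(words))
            return
        for nxt in sorted(nexts):
            extend(words + [nxt])

    extend([start])
    return results


corpus = ["paris is the capital of france", "rome is the capital of italy"]
for sentence in all_generations(train_bigrams(corpus), "paris"):
    print(sentence)
# paris is the capital of france
# paris is the capital of italy   <- fluent, but false
```

Both outputs are grammatical and read naturally; nothing in the model distinguishes the true sentence from the fabricated one.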

How Do You Spot AI Hallucination?

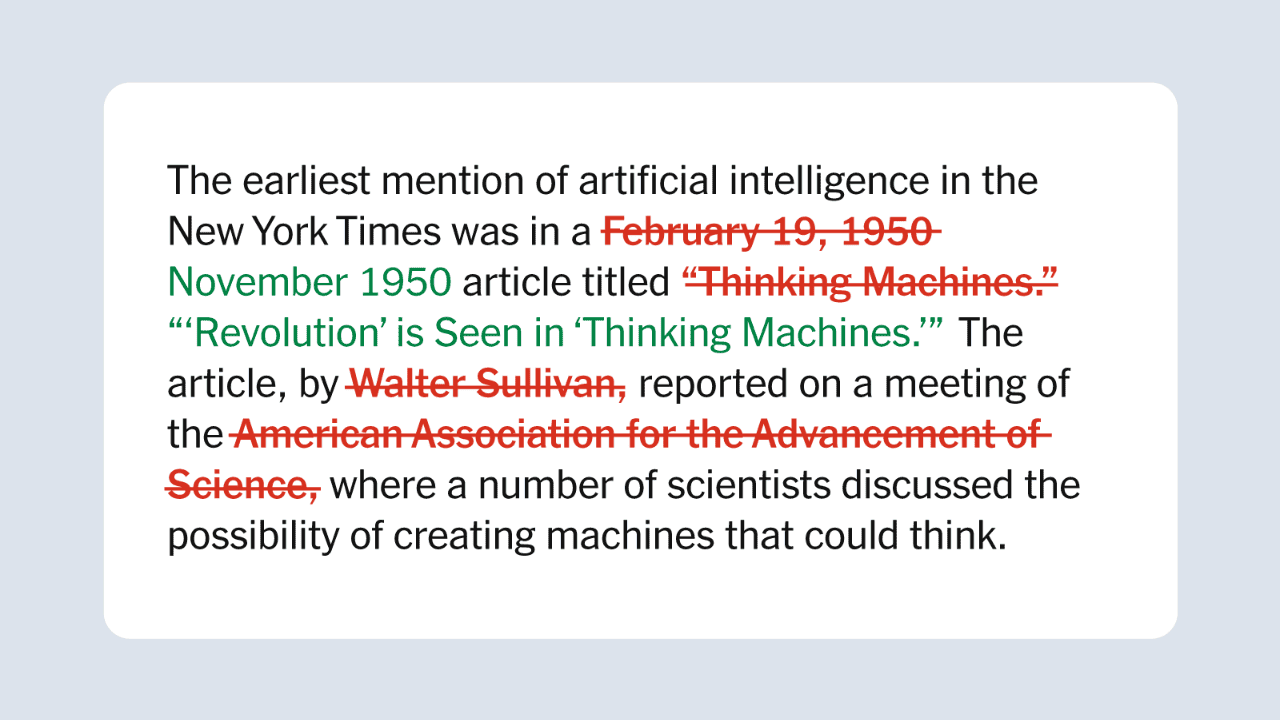

AI programs can hallucinate, giving answers that differ from the expected result (the facts or the truth), and this has nothing to do with malice. People who use these apps need to be able to spot and understand AI hallucinations.

If a large language model like ChatGPT makes a language error in its output, which doesn’t happen very often, you should suspect a hallucination. Likewise, when generated text doesn’t make sense, doesn’t fit the given context, or contradicts the information you gave it, you should suspect the model is making it up.
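Part of this check can be automated with a rough heuristic: flag any sentence in the model’s answer whose content words mostly do not appear in the context you supplied. The sketch below is a hypothetical illustration (the function name, stopword list, and 0.5 threshold are all assumptions); word overlap misses paraphrases and cannot verify facts, so it only points a human reviewer at suspicious sentences.

```python
import re

# Hypothetical, deliberately small stopword list.
STOPWORDS = {"the", "a", "an", "is", "was", "of", "in", "on", "and", "to", "it", "by", "for", "with"}


def content_words(text):
    """Lowercased alphanumeric tokens, minus common stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def flag_unsupported(context, answer, min_overlap=0.5):
    """Return answer sentences whose content words are mostly absent from context."""
    source = content_words(context)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if not words:
            continue
        overlap = len(words & source) / len(words)
        if overlap < min_overlap:
            flagged.append(sentence)
    return flagged


context = "Our store opens at 9am and closes at 6pm on weekdays."
answer = "The store opens at 9am on weekdays. It also offers free parking for all customers."
print(flag_unsupported(context, answer))
# ['It also offers free parking for all customers.']
```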

Because people can tell when a text doesn’t make sense or doesn’t match reality, human judgment and common sense are good tools for catching these mistakes.

Images call for a different kind of check. Computer vision is a field of computer science, artificial intelligence, and machine learning that lets computers interpret images, loosely analogous to the way our eyes and brain do. Computer vision systems typically learn from large amounts of image and video data using convolutional neural networks.

Vision systems tend to hallucinate when an image doesn’t follow the patterns in the data they were trained on. For example, a model that had never seen a tennis ball might label one as a green orange. Likewise, if a model decides that a horse standing next to a picture of a person is standing next to a real person, it is hallucinating.
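The tennis-ball case happens because a typical classifier must choose among the labels it was trained on. The sketch below uses a hypothetical nearest-centroid classifier over average colors (all values made up) to show this, along with one simple mitigation: answering “unknown” when an input is far from everything seen in training. The `max_distance` threshold is an illustrative assumption, not a standard value.

```python
import math

# Hypothetical average RGB colors (0-1 scale) learned from training images.
CENTROIDS = {
    "orange": (1.0, 0.6, 0.0),
    "lime": (0.3, 0.8, 0.1),
}


def classify(rgb, max_distance=None):
    """Return the label whose centroid is closest to `rgb`.

    If max_distance is set and even the closest centroid is farther away,
    return "unknown" instead of forcing a guess."""
    label, dist = min(
        ((name, math.dist(rgb, centroid)) for name, centroid in CENTROIDS.items()),
        key=lambda pair: pair[1],
    )
    if max_distance is not None and dist > max_distance:
        return "unknown"
    return label


tennis_ball = (0.8, 0.9, 0.2)  # never seen in training
print(classify(tennis_ball))                    # orange
print(classify(tennis_ball, max_distance=0.3))  # unknown
```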

If you own an AI-driven car, you may want to know whether it is hallucinating. One sign is that the car behaves differently from usual while you are driving. For example, if it suddenly stops or turns for no apparent reason, it may be reacting to something that isn’t there.

How Can You Prevent AI Hallucinations?

Training data quality. Make sure the data used to train the AI model is complete, varied, and representative of how you want the AI system to work in the real world. Misleading or incomplete training data can lead to hallucinations.
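One simple way to check whether training data is varied enough is to count how often each expected label appears and flag the ones that are rare or missing. This is a minimal sketch under assumed inputs: a list of training labels and a list of labels the system is expected to handle. The function name and the 10% cutoff are arbitrary, hypothetical choices.

```python
from collections import Counter


def underrepresented_labels(labels, expected_labels, min_share=0.1):
    """Return {label: share} for expected labels whose share of the training
    set is below min_share, including labels that never appear at all."""
    counts = Counter(labels)
    total = len(labels)
    report = {}
    for label in expected_labels:
        share = counts[label] / total if total else 0.0
        if share < min_share:
            report[label] = share
    return report


# Hypothetical training labels for an image classifier.
labels = ["cat"] * 50 + ["dog"] * 45 + ["horse"] * 5
expected = ["cat", "dog", "horse", "tennis ball"]
print(underrepresented_labels(labels, expected))
# {'horse': 0.05, 'tennis ball': 0.0}
```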

Careful design and monitoring. Pay close attention to how AI systems are built and updated. Review the data regularly and make changes to close any gaps that could lead to hallucinations. Build error-checking tools and safety features into the system to detect hallucinations and make them less likely.
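As one example of an error-checking feature, some systems ask the model the same question several times and only trust an answer that most of the samples agree on; disagreement between samples is a common warning sign of a made-up answer. The sketch below assumes the sampled answers have already been collected as strings; `guarded_answer` and the 0.6 agreement threshold are hypothetical.

```python
from collections import Counter


def guarded_answer(samples, min_agreement=0.6):
    """Return the majority answer if enough samples agree, else None.

    `samples` are answers to the same prompt, e.g. from repeated model calls.
    A None result means the answers were inconsistent and should be flagged
    for review rather than shown as fact."""
    if not samples:
        return None
    normalized = [s.strip().lower() for s in samples]
    answer, votes = Counter(normalized).most_common(1)[0]
    return answer if votes / len(normalized) >= min_agreement else None


print(guarded_answer(["1889", "1889", " 1889", "1887"]))  # 1889
print(guarded_answer(["Paris", "Lyon", "Nice"]))          # None
```

Agreement is not proof of correctness, since a model can repeat the same mistake consistently, so a check like this lowers the risk rather than removing it.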

Model validation and testing. Before an AI model is used in the real world, it should be tested and shown to work well. This means reviewing the model in detail, running it in different scenarios, and stress-testing it to find weak spots or situations where hallucinations are likely. It’s also important to keep the model updated and patched so that you can deal with new problems.
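A basic stress test perturbs each test input slightly and checks whether the model’s prediction stays the same. The sketch below assumes the model is exposed as a plain function from a list of numbers to a label; `toy_model` is a hypothetical stand-in, and trying every corner of a small ±noise box around an input is one simple perturbation scheme among many.

```python
from itertools import product


def stability_rate(predict, inputs, noise=0.05):
    """Fraction of perturbed inputs (every +/- noise corner around each input)
    that keep the model's original prediction."""
    kept = total = 0
    for x in inputs:
        base = predict(x)
        for signs in product((-1, 1), repeat=len(x)):
            perturbed = [v + s * noise for v, s in zip(x, signs)]
            kept += predict(perturbed) == base
            total += 1
    return kept / total if total else 1.0


def toy_model(x):
    # Hypothetical stand-in for a real model: a fixed threshold on the feature sum.
    return "positive" if sum(x) > 1.0 else "negative"


print(stability_rate(toy_model, [[0.9, 0.9]]))   # 1.0
print(stability_rate(toy_model, [[0.5, 0.51]]))  # 0.75
```

Inputs with a low stability rate sit close to a decision boundary, which is where a small, harmless-looking change can produce a surprising output.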

Transparency and explainability. Users should be able to see what an AI tool can and cannot do. Support the development of tools that help us understand how the AI model makes its decisions. This makes it easier to identify what might be causing hallucinations and to fix it.

Human oversight. Keep people in charge of managing and monitoring AI systems so that mistakes and hallucinations can be found and fixed. Set up feedback loops so that users or staff can report problems caused by the AI, and make sure those reports are investigated and resolved.

User feedback and engagement. Ask the people who use, or have an interest in, the AI system how well it is working. Talk to them about their experiences and about the hallucinations they have run into. This information can reveal problems and patterns that weren’t apparent when the algorithm was built.
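Feedback loops and user reports can start as something very simple: record each report with a topic and an issue type, then count which combinations come up most often so they can be investigated first. `FeedbackLog` below is a hypothetical in-memory sketch; a real system would persist reports and attach the model output that triggered each one.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    """Hypothetical in-memory store for user reports about AI output."""

    reports: list = field(default_factory=list)

    def report(self, topic: str, issue: str, detail: str = "") -> None:
        # Each report records what the prompt was about and what went wrong.
        self.reports.append({"topic": topic, "issue": issue, "detail": detail})

    def top_patterns(self, n: int = 3):
        """Most frequent (topic, issue) pairs, to prioritize investigation."""
        return Counter((r["topic"], r["issue"]) for r in self.reports).most_common(n)


log = FeedbackLog()
log.report("history", "invented date", "wrong year for a battle")
log.report("history", "invented date")
log.report("medicine", "wrong dosage")
log.report("history", "fake citation")
print(log.top_patterns(1))  # [(('history', 'invented date'), 2)]
```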

Conclusion

Keep in mind that these measures may not stop AI hallucinations entirely, but they can reduce the risks that come with them. To avoid problems, it’s important to keep monitoring AI systems and keep improving them.