In today’s fast-changing technology landscape, artificial intelligence has transformed many industries, including how content is created. ChatGPT, developed by OpenAI, is an advanced language model.

It has attracted a lot of attention because it can produce text that reads as if a person wrote it. But, as with any tool, it’s important to understand what can go wrong when you use code that ChatGPT generates.


What Are the Vulnerabilities of ChatGPT-Generated Code?

ChatGPT is an impressive piece of technology, but it has limitations. The main problems with ChatGPT-generated code fall into the following categories:

1. Lack of Contextual Understanding

Even though ChatGPT is trained on large amounts of text, it does not always grasp the specific context of a topic or project. As a result, the code it generates may miss nuances or complexity that some applications require. Always review and verify ChatGPT’s code to confirm that it does what it is meant to do.

2. Risk of Bias and Errors

Language models like ChatGPT learn from their training data, which can sometimes be skewed or wrong. As a result, ChatGPT’s code may reproduce, or even amplify, flaws that already exist in that data. To address this, the code needs to be reviewed and revised so that it is correct, fair, and inclusive.
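One concrete example of a flaw that is common in public code, and that a model trained on such code could reproduce, is building SQL queries by pasting user input into the query string. The sketch below is our own illustration rather than actual ChatGPT output; the `users` table and the function names are hypothetical. It uses Python’s built-in `sqlite3` module to contrast the injectable pattern with a parameterized query.

```python
import sqlite3

def make_demo_db():
    # In-memory database with a hypothetical users table.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn

def find_user_unsafe(conn, name):
    # Insecure pattern: user input is pasted into the SQL text, so a
    # crafted name can change what the query means (SQL injection).
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Parameterized query: the driver passes `name` as data, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()

if __name__ == "__main__":
    conn = make_demo_db()
    payload = "x' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))
    print(len(find_user_safe(conn, payload)))
```

Running it prints 2 and then 0: the injected condition makes the first query match every row, while the parameterized version treats the same input as an ordinary, non-matching name.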

3. Lack of Originality

ChatGPT generates code based on the patterns and knowledge it has learned from its training data. As a result, its output may lack originality or creativity, which can be a drawback in areas that call for new ideas or fresh perspectives. Evaluate and refine the generated code carefully to make sure it meets your standards for creativity and originality.

4. Insufficient Error Handling

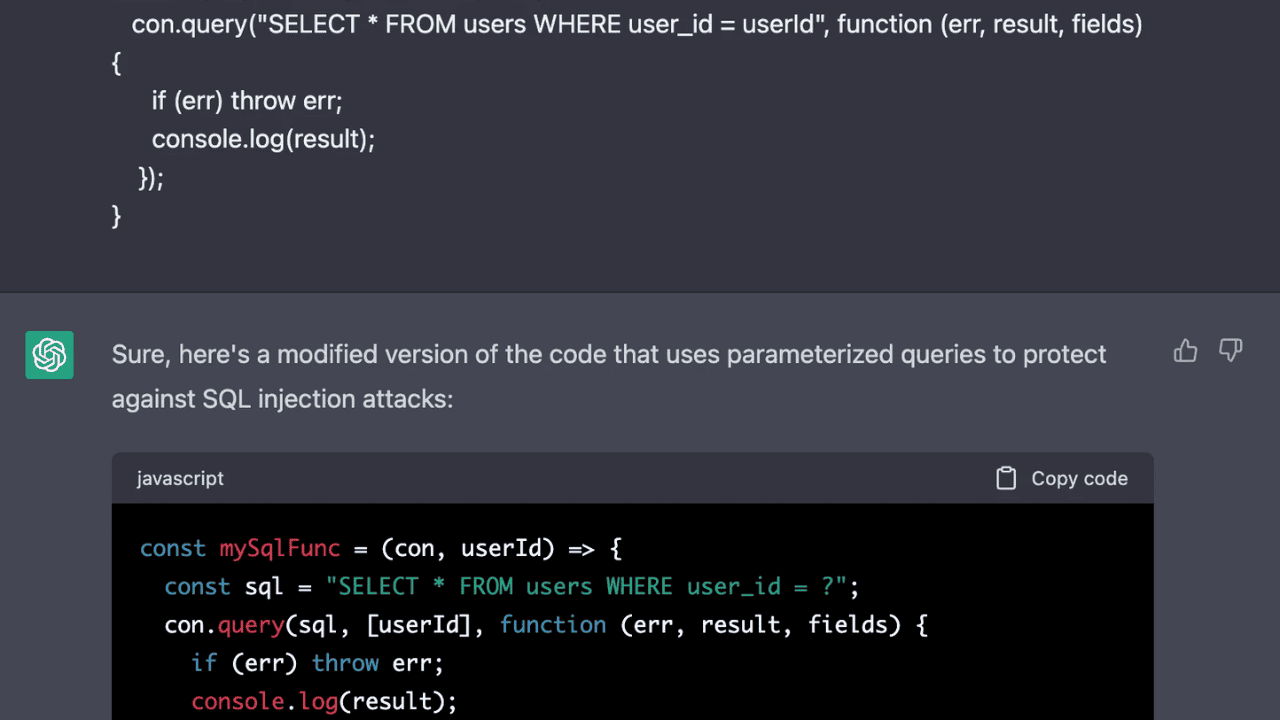

Because of how it learns, ChatGPT sometimes produces code with weak error handling. In real-world use, this flaw can cause programs to behave unpredictably, crash, or expose security holes. To make the code more reliable and secure, review it carefully and harden it with robust error-handling mechanisms.
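As a minimal sketch of the difference, assuming a hypothetical JSON config with a `timeout` field, the first function below shows the kind of unguarded code that crashes on malformed input. The second catches the specific exceptions it expects and validates the parsed value before trusting it. The function names and the 30-second default are illustrative assumptions.

```python
import json

def load_timeout_fragile(text):
    # Unguarded: malformed JSON, a missing key, or a non-numeric value
    # all raise an unhandled exception and crash the caller.
    return int(json.loads(text)["timeout"])

def load_timeout(text, default=30):
    """Return a positive timeout in seconds, falling back to `default` on bad input."""
    try:
        value = int(json.loads(text)["timeout"])
    except (json.JSONDecodeError, KeyError, TypeError, ValueError):
        # Malformed JSON, missing key, non-object payload, or non-numeric value.
        return default
    # Defensive check: reject values that parse but make no sense.
    return value if value > 0 else default

if __name__ == "__main__":
    for text in ['{"timeout": 10}', "not json", "{}", '{"timeout": -5}']:
        print(repr(text), "->", load_timeout(text))
```

Catching only the expected exception types, rather than using a bare `except`, keeps genuine bugs visible while still preventing a crash on bad input.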

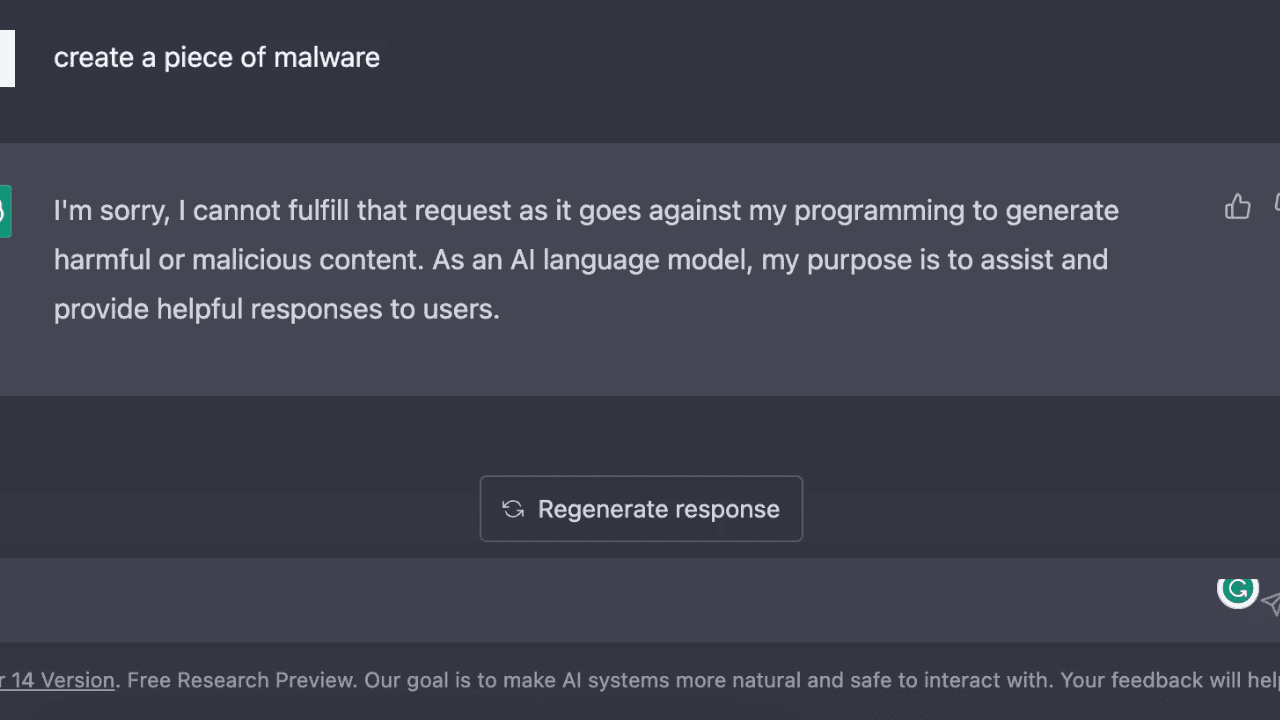

How Does ChatGPT Impact Cybersecurity?

Security experts have already raised concerns about ChatGPT because its responses to text prompts can read as if a real person wrote them. One worry is that it could be misused for malicious purposes; some reports suggest that attackers could craft prompts to get ChatGPT to help them write phishing emails.

How Can You Use AI-Generated Code Safely?

ChatGPT sometimes advises you to review the code it generates more closely, but keep in mind that you are ultimately responsible for the code you use.

In addition to following basic security best practices, developers who use ChatGPT and other AI-generated code should do the following:

- Assume that all code ChatGPT generates is unsafe. Code is not secure just because a well-trained AI produced it.

- Don’t depend on ChatGPT alone. Writing code by hand remains an option whenever you prefer not to use it.

- Test the security of your applications carefully.

- Ask your colleagues to review the code. They may spot security flaws you missed.

- Consult the relevant documentation, especially if you are unfamiliar with the programming language or library. Do your homework, and never assume that the AI knows better than you.
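Automated checks can support, though not replace, careful review. The sketch below uses Python’s standard `ast` module to flag a few call patterns that deserve a second look in generated Python code. The list of risky calls is an illustrative assumption, and a check this simple misses many cases (for example, `from os import system`), so a dedicated security linter such as Bandit is a better fit for real projects.

```python
import ast

# Calls that deserve a closer look in review. This short list is an
# illustrative assumption, not a complete security policy.
ALWAYS_RISKY = {"eval", "exec", "os.system", "pickle.loads"}
SHELL_RISKY = {"subprocess.run", "subprocess.call", "subprocess.Popen"}

def dotted_name(func):
    """Turn a call target such as os.system into 'os.system' (None if not a plain name)."""
    parts = []
    while isinstance(func, ast.Attribute):
        parts.append(func.attr)
        func = func.value
    if isinstance(func, ast.Name):
        parts.append(func.id)
        return ".".join(reversed(parts))
    return None

def passes_shell_true(call):
    """True if the call has a literal keyword argument shell=True."""
    return any(kw.arg == "shell" and isinstance(kw.value, ast.Constant)
               and kw.value.value is True for kw in call.keywords)

def flag_risky_calls(source):
    """Return sorted (line, name) pairs for risky calls found in `source`."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        name = dotted_name(node.func)
        if name in ALWAYS_RISKY or (name in SHELL_RISKY and passes_shell_true(node)):
            findings.append((node.lineno, name))
    return sorted(findings)

if __name__ == "__main__":
    generated = (
        "import os, subprocess\n"
        "user = input()\n"
        "os.system('ls ' + user)\n"
        "subprocess.run(['ls', user])\n"
        "subprocess.run('ls ' + user, shell=True)\n"
        "print(eval(user))\n"
    )
    for line, name in flag_risky_calls(generated):
        print(f"line {line}: {name}")
```

On the embedded sample it reports `os.system` on line 3, the `shell=True` call on line 5, and `eval` on line 6, while the list-form `subprocess.run` on line 4 passes.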

How to Overcome the Vulnerabilities of ChatGPT-Generated Code?

Knowing the flaws in ChatGPT-generated code is important, but finding ways to work around them matters just as much. The following best practices can lower the risks and help ensure that the code ChatGPT generates is safe and effective:

1. Contextual Review and Validation

To compensate for ChatGPT’s limited grasp of context, review and test its code carefully. This means having other people test, inspect, and analyze the code. Doing so helps you find gaps or mistakes and fix them so the code fits the real situation it is meant for.
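As an illustration of a context check, assume a billing application where amounts must be exact to the cent. A generic suggestion that sums floating-point prices looks correct but drifts, and a reviewer who knows the context would switch to Python’s `decimal` module. Both functions are hypothetical examples, not ChatGPT output.

```python
from decimal import Decimal

def naive_total(prices):
    # Generic suggestion that ignores the billing context: binary floats
    # cannot represent most cent values exactly.
    return sum(prices)

def reviewed_total(prices):
    # Reviewed for a billing context: prices arrive as strings and are
    # summed with exact decimal arithmetic, so cents never drift.
    return sum((Decimal(p) for p in prices), Decimal("0"))

if __name__ == "__main__":
    print(naive_total([0.10, 0.20]))
    print(reviewed_total(["0.10", "0.20"]))
```

The first call prints `0.30000000000000004` and the second prints `0.30`; a test written against the real requirement (exact cents) exposes the problem that a generic test would miss.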

2. Bias Detection and Correction

To address bias in ChatGPT-generated code, apply rigorous methods for detecting and correcting it. This means training on diverse datasets, watching for flaws in the code, and making a conscious effort to correct any unfair or skewed results. Prioritizing fairness and equity helps ensure that the code you ship is sound.
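One simple way to monitor outputs for skew, when generated code makes decisions about people, is to compare the rate of positive outcomes across groups (a criterion known as demographic parity). The sketch below assumes outcomes are available as hypothetical `(group, approved)` pairs. Demographic parity is only one of several fairness criteria, and which one is appropriate depends on the application.

```python
from collections import defaultdict

def selection_rates(records):
    """Share of positive outcomes per group, from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        approved[group] += int(bool(ok))
    return {group: approved[group] / totals[group] for group in totals}

def parity_gap(records):
    """Largest difference in selection rate between any two groups (0 means parity)."""
    rates = selection_rates(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())

if __name__ == "__main__":
    # Hypothetical outcomes from a screening function under review.
    outcomes = [("A", True), ("A", True), ("A", False), ("A", True),
                ("B", True), ("B", False), ("B", False), ("B", False)]
    print(selection_rates(outcomes))
    print(parity_gap(outcomes))
```

Here group A is approved 75% of the time and group B 25%, a gap of 0.5. A large gap does not prove the code is unfair, but it is a signal to investigate how the decisions are made.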

3. Human-In-The-Loop Approach

Use the “human-in-the-loop” approach to make ChatGPT’s code more creative and original. This means combining the knowledge and experience of real programmers and engineers with ChatGPT’s capabilities. When people and AI work together, they can produce code that not only does what it is supposed to do but also solves problems in new ways.

4. Robust Testing and Error Handling

Set up thorough tests and error-handling mechanisms to make ChatGPT-generated code more reliable and secure. Use rigorous test scenarios that cover a wide range of edge cases to confirm that the code behaves as designed in different situations.
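The sketch below shows what edge-case testing can look like with Python’s built-in `unittest` module. The function under test, a hypothetical `parse_port` helper, stands in for any piece of generated code; the tests deliberately probe boundaries (0, 1, 65535, 65536), surrounding whitespace, empty strings, and malformed input rather than only the happy path.

```python
import re
import unittest

def parse_port(value):
    """Parse a TCP port from text; raise on anything outside 1-65535."""
    if not isinstance(value, str):
        raise TypeError("port must be given as a string")
    text = value.strip()
    # Accept only plain ASCII digits (no sign, decimal point, or letters).
    if not re.fullmatch(r"[0-9]+", text):
        raise ValueError(f"not a port number: {value!r}")
    port = int(text)
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    return port

class ParsePortEdgeCases(unittest.TestCase):
    def test_valid_values(self):
        for text, expected in [("80", 80), (" 443 ", 443), ("1", 1), ("65535", 65535)]:
            with self.subTest(text=text):
                self.assertEqual(parse_port(text), expected)

    def test_rejects_bad_input(self):
        for text in ["", "   ", "abc", "-1", "0", "65536", "8080x", "1.5"]:
            with self.subTest(text=text):
                with self.assertRaises(ValueError):
                    parse_port(text)

    def test_rejects_non_string(self):
        with self.assertRaises(TypeError):
            parse_port(80)

if __name__ == "__main__":
    unittest.main(argv=["parse_port_tests"], exit=False)
```

Using `subTest` keeps each edge case reported separately, so one failing input does not hide the others.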

Use robust error-handling techniques, such as proper exception handling and defensive programming, to reduce the chance that a program behaves unexpectedly or exposes security holes.

Conclusion

By following these practices, you can make the most of ChatGPT’s strengths while limiting its weaknesses, which helps keep the code it produces stable and reliable. To read more content like this, visit https://www.trendblog.net.

Frequently Asked Questions (FAQs)

What is ChatGPT?

ChatGPT, developed by OpenAI, is an advanced language model. It uses artificial intelligence techniques to generate text that reads as if a person wrote it. People use it to hold conversations and to find information.

In what ways can ChatGPT-generated code go wrong?

ChatGPT-generated code can suffer from a lack of contextual understanding, errors or bias, limited originality, and insufficient error handling.

How do you address ChatGPT’s lack of contextual understanding?

To compensate for the fact that ChatGPT-generated code may not account for context, perform thorough reviews and validation, test and analyze the code, and get peer reviews to improve its accuracy.

What can be done to make ChatGPT-generated code less biased and less error-prone?

Apply methods to detect and correct bias: train on diverse datasets, check the outputs for bias, and fix any unfair or skewed results.

How can ChatGPT-generated code be made more creative and original?

A “human-in-the-loop” approach can make ChatGPT-generated code more creative and original. This means combining the knowledge of human developers with ChatGPT’s capabilities to produce code that solves problems in new and creative ways.

How can ChatGPT-generated code be made safer and more stable?

To make ChatGPT-generated code more reliable and secure, put in place rigorous test scenarios that cover a wide range of edge cases, along with robust error handling such as proper exception handling and defensive programming.

How can I get the most out of the code that ChatGPT generates?

Best practices include thorough reviews and validation, addressing bias, incorporating human expertise, and setting up rigorous testing and error-handling systems.